I pressed cancel on the Windows firewall pop-up, as blocking the connection doesn’t affect PySpark.

#HOW TO INSTALL PYSPARK IN JUPYTER NOTEBOOK WINDOWS#

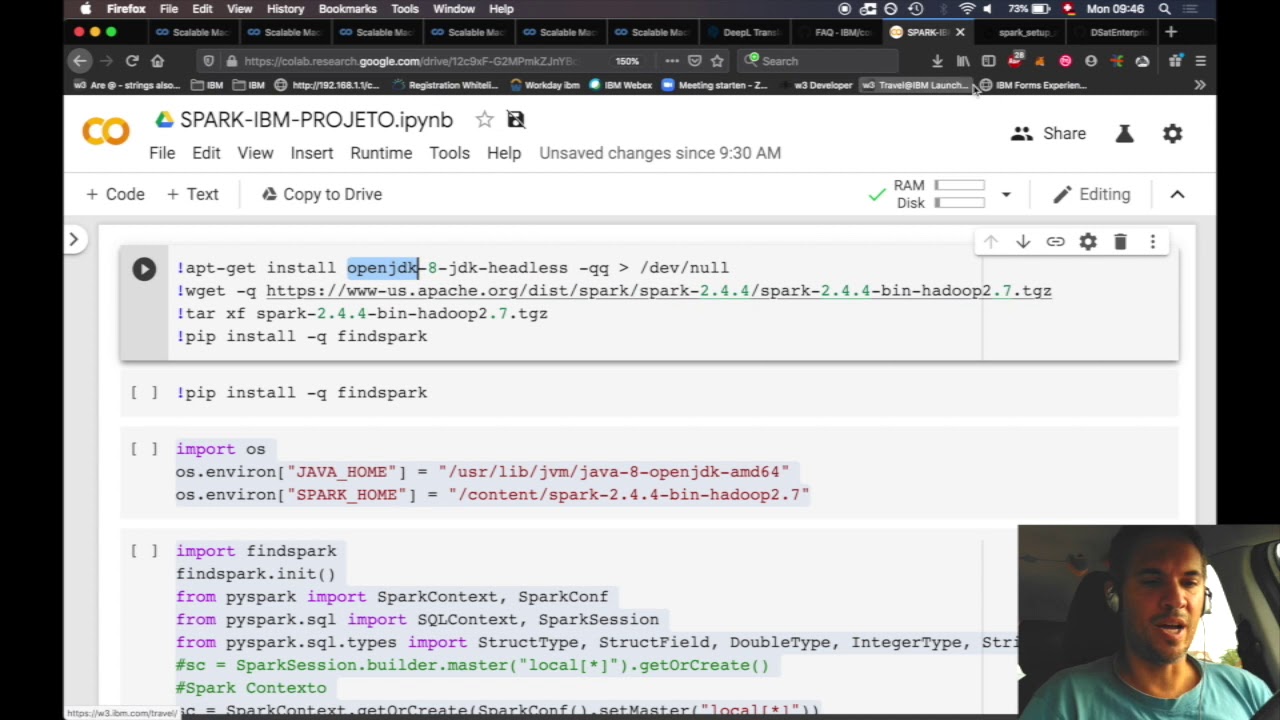

Required packages: Java SE Development Kit, Scala Build Tool, Spark 1.5.

(Optional, if you see a Java-related error in step C) Find the installed Java JDK folder from step A5, for example, `C:\Program Files\Java\jdk1.8.0_121`, and add the corresponding environment variable.

To run Jupyter notebook, open Windows command prompt or Git Bash and run `jupyter notebook`. If you use Anaconda Navigator to open Jupyter Notebook instead, …

Once inside Jupyter notebook, open a Python 3 notebook. After `findspark.init()` has run, import PySpark with `import pyspark  # only run after findspark.init()`, create the `spark` session, and test it with `df = spark.sql('''select 'spark' as hello ''')`.

When you press run (Shift+Enter), it might trigger a Windows firewall pop-up.
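As a rough sketch of the notebook cell described above, the following wraps the same steps in two small functions. The source drops the line that creates `spark`, so `SparkSession.builder.getOrCreate()` is an assumption here, and `start_session` and `hello_from` are hypothetical helper names. It also assumes findspark and a local Spark distribution are already set up.

```python
QUERY = "select 'spark' as hello"


def start_session():
    """Locate the local Spark install, then create (or reuse) a session."""
    import findspark

    findspark.init()  # must run before pyspark is imported

    import pyspark  # noqa: F401  # only run after findspark.init()
    from pyspark.sql import SparkSession

    # Assumption: the source omits how `spark` is built; this is the usual call.
    return SparkSession.builder.getOrCreate()


def hello_from(spark):
    """Run the one-row test query and return the value of its `hello` column."""
    df = spark.sql(QUERY)
    return df.collect()[0]["hello"]
```

In a notebook cell, `spark = start_session()` followed by `hello_from(spark)` should return the string `spark` if the installation works.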

#HOW TO INSTALL PYSPARK IN JUPYTER NOTEBOOK INSTALL#

At a high level, these are the steps to install PySpark and integrate it with Jupyter notebook:

- Install the required packages
- Download and build Spark
- Set your environment variables
- Create a Jupyter profile for PySpark

If you don’t have Java or your Java version is 7.x or less, download and install Java from Oracle. I recommend getting the latest JDK (version 1.8).

To unpack a .tgz file on Windows, you can download and install 7-zip.

If you use pip, you can install JupyterLab with `pip install jupyterlab`. If installing using `pip install --user`, you must add the user-level bin directory to your PATH environment variable in order to launch `jupyter lab`. If you are using a Unix derivative (FreeBSD, GNU/Linux, OS X), you can achieve this by using `export PATH`.

After getting all the items, let’s set up PySpark.

- Unpack the .tgz file from the Spark distribution in item 1 by right-clicking on the file icon and selecting 7-zip > Extract Here. For example, I unpacked mine with 7-zip from step A6.
- Move the downloaded winutils.exe to the \bin folder of the Spark distribution. For example, `C:\LOCAL_SPARK\spark-2.4.4-bin-hadoop2.7\bin\winutils.exe`.
- Add environment variables: the environment variables let Windows find where the files are when we start the PySpark kernel. You can find the environment variable settings by putting “environ…” in the search box.
- In the same environment variable settings window, look for the Path or PATH variable, click edit and add …
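A quick way to confirm the setup steps above is to check them from Python. This is a minimal sketch, not part of the original instructions: `check_spark_setup` is a hypothetical helper, and `SPARK_HOME` and `HADOOP_HOME` are assumed to be the variables you set, since the source does not list the variable names.

```python
import os
from pathlib import Path


def check_spark_setup(env=None):
    """Return a list of problems found; an empty list means all checks passed."""
    env = os.environ if env is None else env
    problems = []

    # Assumed variable names; the source does not spell them out.
    for name in ("SPARK_HOME", "HADOOP_HOME"):
        value = env.get(name)
        if not value:
            problems.append(f"{name} is not set")
        elif not Path(value).is_dir():
            problems.append(f"{name} points to a missing folder: {value}")

    # winutils.exe should sit in the bin folder of the Spark distribution.
    spark_home = env.get("SPARK_HOME")
    if spark_home and not (Path(spark_home) / "bin" / "winutils.exe").is_file():
        problems.append("winutils.exe not found in the bin folder of SPARK_HOME")

    return problems
```

Calling `check_spark_setup()` with no arguments inspects the current environment. Environment variables changed in the Windows settings window are only visible to command prompts and notebooks started afterwards, so restart them before checking.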

- Python and Jupyter Notebook. You can get both by installing the Python 3.x version of the Anaconda distribution.
- winutils.exe, a Hadoop binary for Windows, from Steve Loughran’s GitHub repo. Go to the folder for the Hadoop version that matches your Spark distribution and find winutils.exe under /bin.
- The findspark Python module, which can be installed by running `python -m pip install findspark` either in Windows command prompt or Git Bash if Python is installed. You can find command prompt by searching cmd in the search box.
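To check whether the Python pieces listed above are already installed, a small sketch like the following can be run in the same Python that Jupyter uses. `missing_modules` is a hypothetical helper; it relies only on the standard library's `importlib.util.find_spec`, and `notebook` is assumed to be the package that provides Jupyter Notebook in your environment.

```python
from importlib.util import find_spec


def missing_modules(names):
    """Return the top-level module names this interpreter cannot find."""
    return [name for name in names if find_spec(name) is None]


# Check the two Python packages this setup relies on.
print(missing_modules(["findspark", "notebook"]))
```

An empty list means both are importable. Any name it returns can be installed with `python -m pip install` followed by that name.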

#HOW TO INSTALL PYSPARK IN JUPYTER NOTEBOOK HOW TO#

When I write PySpark code, I use Jupyter notebook to run my code. In this post, I will show you how to install and run PySpark locally in Jupyter Notebook on Windows.

How to Install and Run PySpark in Jupyter Notebook on Windows
